Episode 1: Understanding Large Language Models (LLMs)

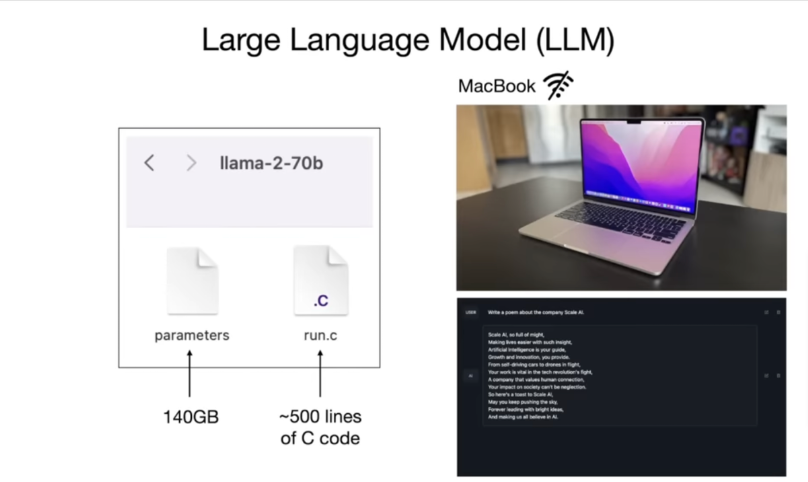

At its core, an LLM consists of just two files, which you can picture sitting together in a hypothetical directory:

- A parameter file, which holds the weights of the neural network that functions as the language model.

- Executable code that runs the model; this could be written in Python or C, for example.

These neural networks work by predicting the next word in a sequence, and the parameters are spread throughout the network.
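To make "predicting the next word" concrete, here is a minimal sketch. It is deliberately not a neural network: it just counts which word follows which in a tiny sample sentence, and those counts stand in for the parameters. The function names `train_bigram` and `predict_next` and the sample text are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(text: str) -> dict:
    """Count which word follows which; the counts play the role of 'parameters'."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts: dict, word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

counts = train_bigram("the cat sat on the mat and the cat slept")
print(predict_next(counts, "the"))  # cat
```

A real LLM replaces the lookup table with billions of learned weights, but the job is the same: given what came before, pick a likely next word.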

Each parameter is stored as 2 bytes of data, so a model with 70 billion parameters requires approximately 140 GB of storage (70 billion × 2 bytes).
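The storage arithmetic can be checked in a couple of lines. This is a rough sketch that assumes 1 GB = 10^9 bytes and ignores any file-format overhead; `model_size_gb` is a helper name invented for this example.

```python
def model_size_gb(num_params: int, bytes_per_param: int = 2) -> float:
    """Approximate parameter-file size in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9

print(model_size_gb(70_000_000_000))  # 140.0
```

Changing `bytes_per_param` shows how the file grows or shrinks if each parameter is stored with more or fewer bytes.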

The code file runs this neural network, so the model can operate without an internet connection.
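Here is a toy sketch of the two-file idea: a parameter file on disk, plus a small piece of code that loads it and generates words with no network access. Everything in it is an assumption for illustration: the file name `parameters.json`, the `run_model` function, and the tiny lookup table that stands in for real model weights.

```python
import json
import os
import tempfile

# Hypothetical "parameter file" contents: a tiny next-word lookup table
# standing in for the billions of weights in a real model.
toy_parameters = {"the": "cat", "cat": "sat", "sat": "down"}

def run_model(param_path: str, prompt: str, max_new_words: int = 3) -> str:
    """Load parameters from local disk and generate words one at a time."""
    with open(param_path, encoding="utf-8") as f:
        params = json.load(f)
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        next_word = params.get(words[-1])
        if next_word is None:  # nothing stored for this word, so stop
            break
        words.append(next_word)
    return " ".join(words)

with tempfile.TemporaryDirectory() as directory:
    # Write the parameter file, then run the model purely from local disk.
    param_path = os.path.join(directory, "parameters.json")
    with open(param_path, "w", encoding="utf-8") as f:
        json.dump(toy_parameters, f)
    output = run_model(param_path, "the")

print(output)  # the cat sat down
```

Nothing here touches the internet: once the parameter file is on the machine, the code only needs to read it and repeat the predict-the-next-word step.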

That’s the long and short of LLMs, in a very crude and simplified way.

🔔For more such content, check all AI, Data Science & Machine Learning predictions for 2024 here:

I can be reached to answer any question at www.rohansharma.net

LinkedIn: https://www.linkedin.com/in/rohanshram9

#ai #datascience #machinelearning #architecture #aiexecutive #aileadership #aileaders